- Sep 12, 2024

Who Should Take Point on an AI Project?

- Dr. Joseph Shepherd

- Program-Management


A lot has changed in the software world over the last year. Data has always been top-of-mind for product teams and systems integrators, but the rapid adoption of AI means everyone has had not only to learn about data science but also to find ways to integrate it into every aspect of their solutions. These days, no one wants to build a product or solution unless it has some aspect of AI. While some of this will fade as the hype cycle winds down, we need to plan for a world where software development and data science are co-pilots on our innovation journey. How does one do that, though, when software engineering and data science are so drastically different?

Who's in Charge Here?

Who leads the project? Is it engineering or data science? I hear this question a lot, and most customers default to the easy answer: if it's a data science project, then data science leads. This answer, however, is flawed and ignores a great deal. Now, I could take the easy way out myself and tell you "it depends," but then I wouldn't be worth my salt, now would I? Instead, I'll give you the model to answer this question, and then we can unpack the logic behind it.

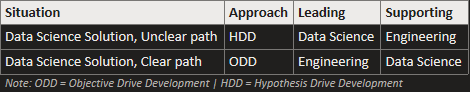

In the model above, I've described the situation we find ourselves in and, based on that situation, the approach we should take, who should lead, and who should support.

It's What You Know That Matters

The only question that really matters is: do we have a clear path forward? Most customers believe that a data science project should be led by the data science team, but that isn't always true. There are times when it makes perfect sense for the engineering team to take point on a data science project. Just hear me out.

Data Science Solution, Unclear Path

If we are unsure of our path forward in a data science project, then who better to answer the question than the data science team? We are squarely in a state of exploration and experimentation, and the primary goal is to find a viable path forward. To do that, we need to be able to conduct experiments as quickly and effectively as possible. At this stage, the team should employ a Hypothesis-Driven Development (HDD) approach in which the data science team focuses on iterative experimentation. These experiments, of course, should be measurable and based on real customer signals. The backlog should be a series of experiments rather than features, since the team doesn't yet know how to solve the problem, and the team's focus should be on maximizing experiment quality and throughput.

This doesn't mean it's all on the data scientists while engineering sits on its heels, waiting for the signal to join. Not at all. If maximizing experiment quality and throughput is the goal at this stage, then engineering should focus on building rapid experimentation capabilities that allow the data science team to run experiments, collect feedback from users, and iterate as quickly as possible. These capabilities aren't wasted effort because, in true data science projects, the need to experiment quickly never really goes away. Data changes, new data comes in, and conditions shift, all of which may require new experimentation.

Data Science Solution, Clear Path

Now let's fast-forward: we've run our experiments, and we have a strong signal that we are on the right path. Once you find that path forward, the roles on the team switch: engineering takes point, and data science supports. Why? We've entered a new stage in the solution development lifecycle. We are no longer experimenting; we have a path, and it's time to get after it. At this stage, everyone is focused on implementation. The data scientists are finalizing their models and making them robust, and they are considering where the data lives, how it's accessed, and what transformations it needs to undergo to be of use. The engineers, in turn, shift from building a supporting experimentation platform to building an actual product that delivers value to the end user. The whole team shifts away from HDD to Outcome-Driven Development (ODD). Now high performance is about our ability to ship high-quality features and functionality quickly. The backlog should shift as well, from hypotheses and experiments (though you will still see these from time to time) to features and functionality.

A Couple of Other Considerations

This is, of course, a simplified scenario to help illustrate the point. The real project team would have product owners, designers, and probably subject matter experts, all playing their own roles, and those roles may differ from one situation to the next. The point here is that the project team needs to be honest about what it knows and where it is in the lifecycle, and then figure out how best to deploy people and resources toward the common goal of shipping value.

Conclusion

Hypothesis-Driven Development, when applied at the right stage, can be a powerful methodology for bringing engineering and data science teams together to deliver value in a well-understood and repeatable fashion. By fostering a culture of experimentation, data-driven decision-making, and continuous learning at the appropriate stage of innovation, HDD keeps development efforts focused, efficient, and aligned with business goals. As AI continues to evolve, adopting HDD will be crucial to driving success and delivering impactful solutions.